Hi folks,

TL;DR: if you like Matrix (and especially if you're building stuff on it), please support us via Patreon or Liberapay to keep the core team able to work on it full-time, otherwise the project is going to be seriously impacted. And if you're a company who is invested in Matrix (e.g. itching for Dendrite), please get in touch ASAP if you'd like to sponsor core development work from the team. And if you're a philanthropic billionaire who believes in our ideals of decentralisation, encryption, and open communication as a basic human right - we'd love to hear from you too O:-)

I was expecting this blog post to be the Matrix Summer Special, focusing entirely on the incredible progress and updates we've made in the last few months in Matrix. However, instead I'm going to talk about something different and literally critical to Matrix's success.

As many people know, Matrix.org development has historically been exclusively and very generously sponsored by a large multinational telecoms infrastructure company for whom most of the core team once built telco messaging apps. However, despite the project progressing better than ever (more on that later), we have just had our funding dramatically cut by >60%. [Update: as of Aug 2017 it is effectively cut entirely, with enough $ left over to cover until end of October.]

We seem to be suffering from a darkly amusing paradox, as the rationale from our corporate overlords is essentially: “Wow! Matrix is doing great and growing well - and you seem to have all sorts of exciting people and companies using and building on it. But we've been footing the whole development bill since the outset in May 2014, and this simply doesn't feel fair. We're happy to keep funding though - but only if others do too!”. In other words, in some ways we are a victim of our own success...

So we now find ourselves in the situation that despite the project looking better than ever and having a tonne of amazing stuff in the pipeline, we are suddenly missing the funding to keep the core team working on it. And the team is quite sizeable - reflecting the ambition and size of Matrix: right now we have effectively 11 people working specifically on Matrix itself: 1 on Synapse, 1 on Dendrite, 1 on e2e crypto, 2 on matrix-react-sdk (which powers Riot/Web), 2 on matrix-ios-kit / matrix-ios-sdk, 2 on matrix-android-sdk, 1 on bridges, and me (Matthew) managing the overall project. (This ignores folks who overlap the team who are working specifically on Riot stuff).

Over the last few years we've had countless people ask if they can financially support Matrix. We haven't been able to accept it for various reasons, but now is the time for us to step towards a more independent setup, and avoid a repeat of the situation we're currently facing by opening up to external support.

So we need help from the community to keep going! Please head over to Patreon or Liberapay and put some money in the meter (or send some bitcoin to 1LxowEgsquZ3UPZ68wHf8v2MDZw82dVmAE or ether to 0xA5f9a4f9E024F6D727f7afdA9257e22329A97485). In return, you'll get to keep Matrix evolving at a decent rate, be a member of the upcoming +supporters:matrix.org group (complete with flair badges!), and other benefits like access to #matrix-supporters:matrix.org - a new dedicated room for prioritised support, discounted goodies from Riot once paid services arrive, access to a weekly supporters-only status podcast(!), and of course receive our eternal thanks. :)

Meanwhile, if you're a company who depends on Matrix: please get in touch. We have the option for you to sponsor core Matrix development (e.g. Dendrite) or for us to provide you with more targeted support or feature development. We're already talking to several organisations who want to accelerate Dendrite specifically - and the more support we have there the faster we can go.

We'd also like to thank UpCloud for sponsoring hosting for the Matrix.org synapse instances - UpCloud has been coping impressively with the massive I/O and CPU/RAM requirements we have, and we recommend them unreservedly for folks looking to run their own homeservers.

Finally, one of the longer term plans to help fund Matrix is to get sponsorship from Riot, once Riot starts offering paid services. So, if you're an investor who's interested in the for-profit sides of Riot (paid integrations and paid Matrix hosting) then please get in touch with the Riot team ASAP!

Moving forward, we are confident that we can secure funding through sponsorship and Riot paid services, but in truth this decision caught us by surprise, so we need help both in the long term and right now!

And whatever the funding situation, we're of course always looking for contributions of code, bug reports, or just help spreading the word about the project too! :)

Status Update

(or scroll to the next section to see why this is bigger than "just" decentralised encrypted communication)

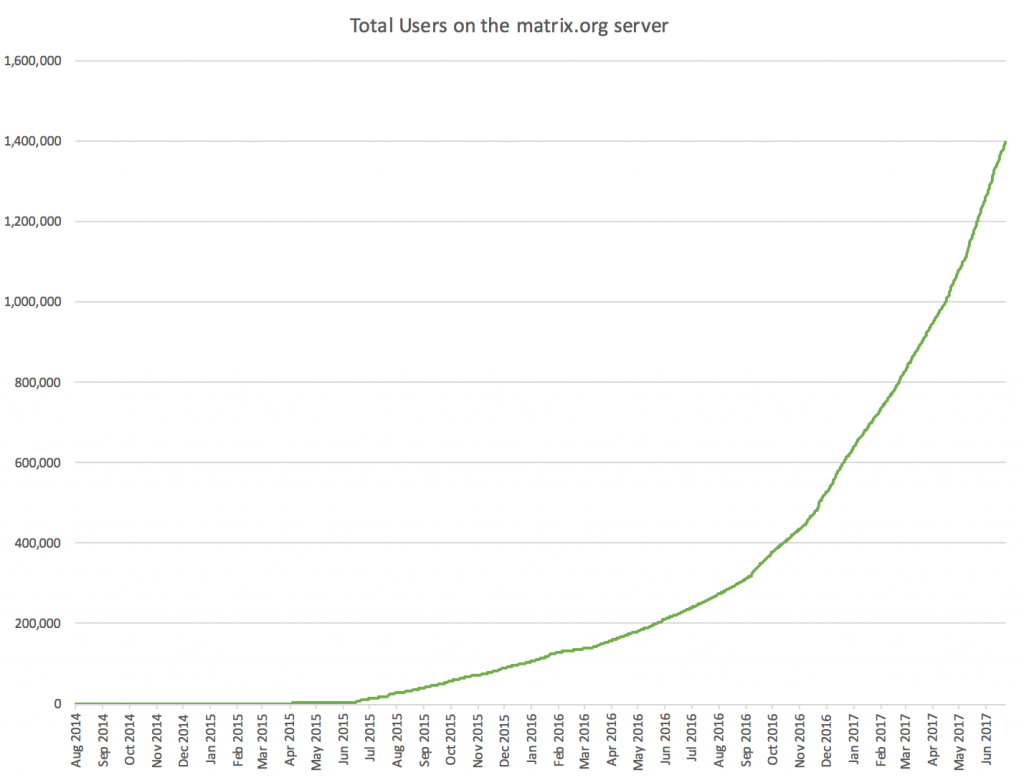

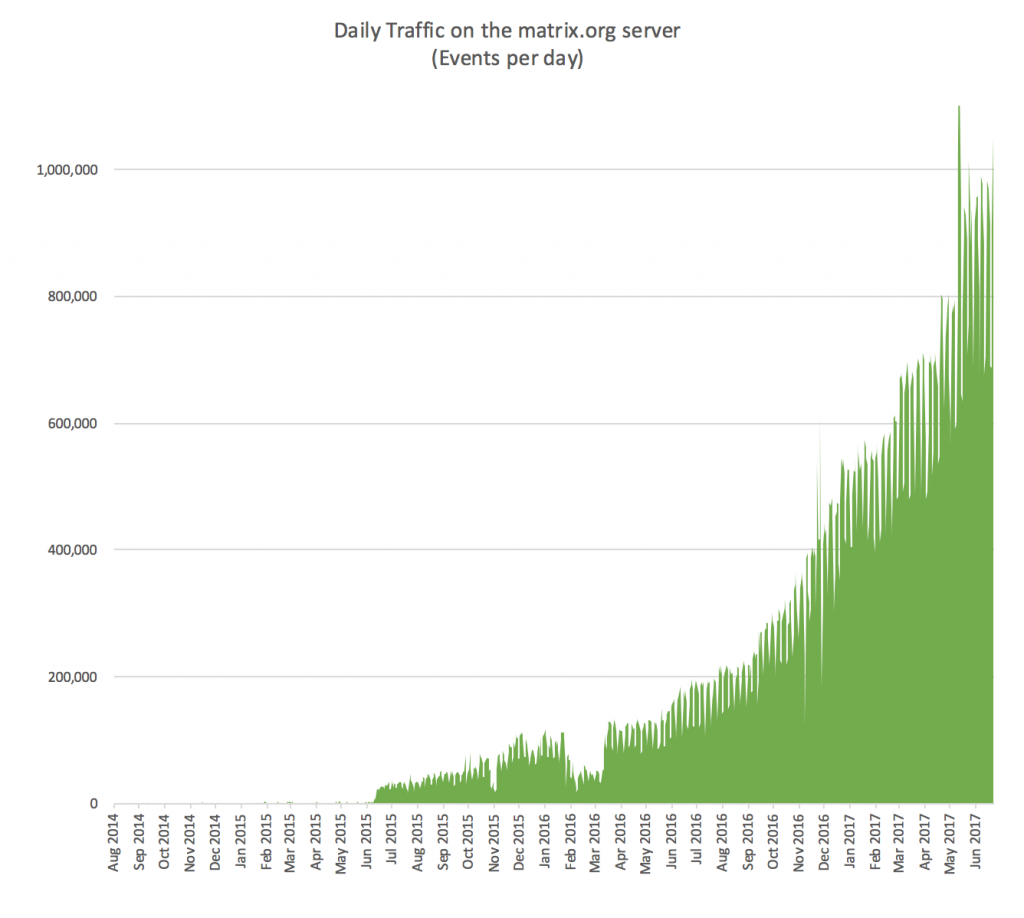

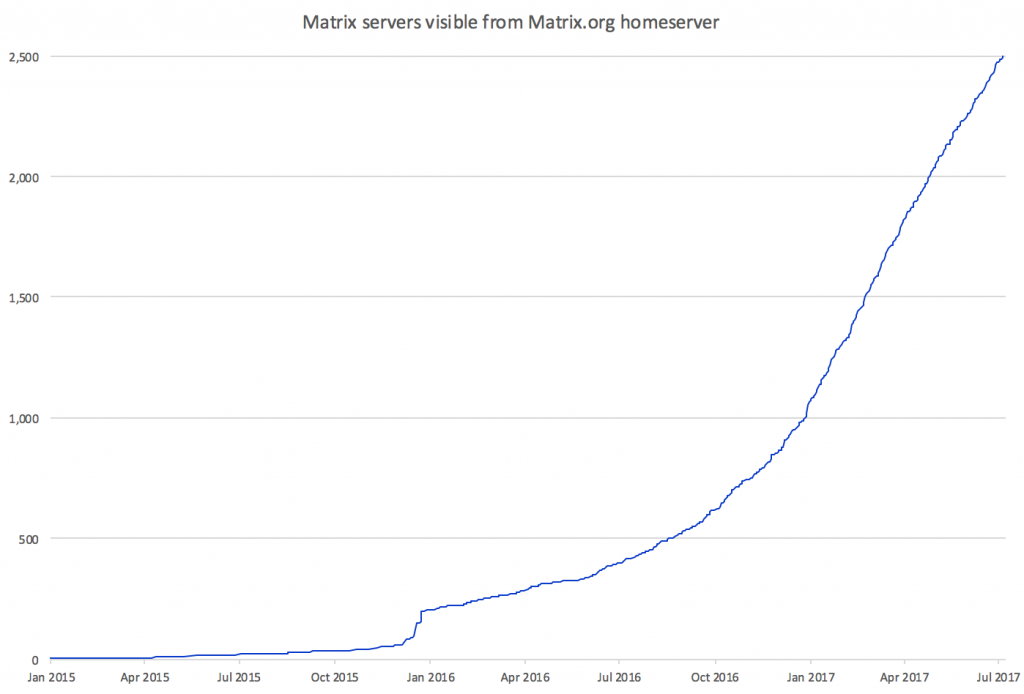

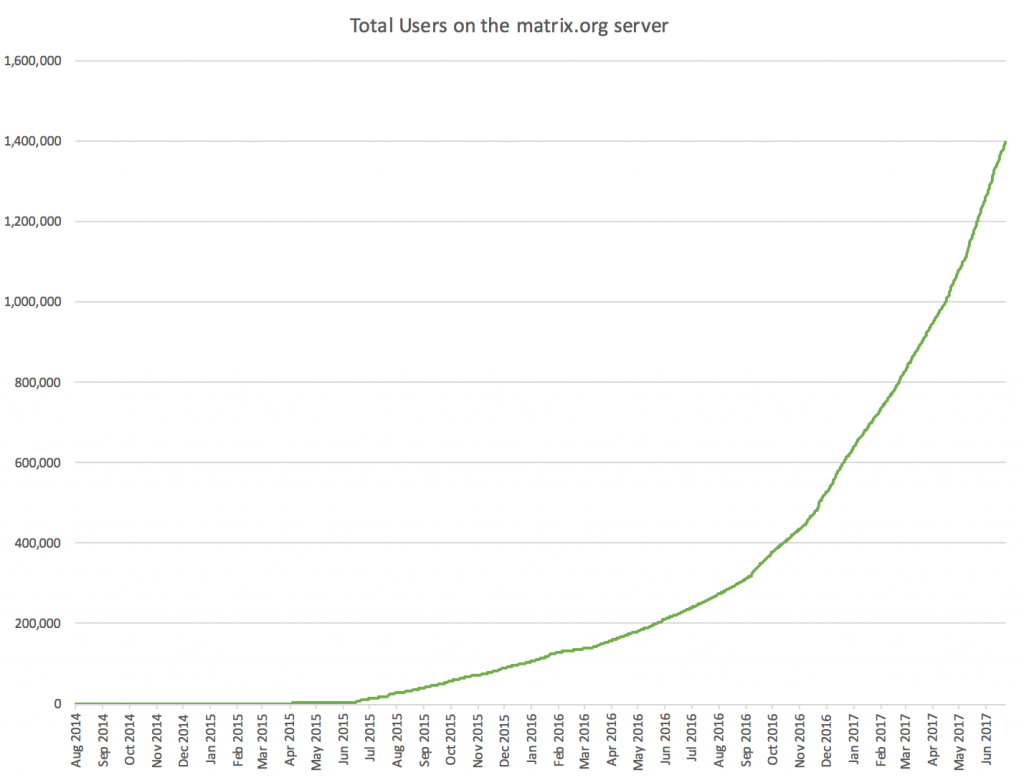

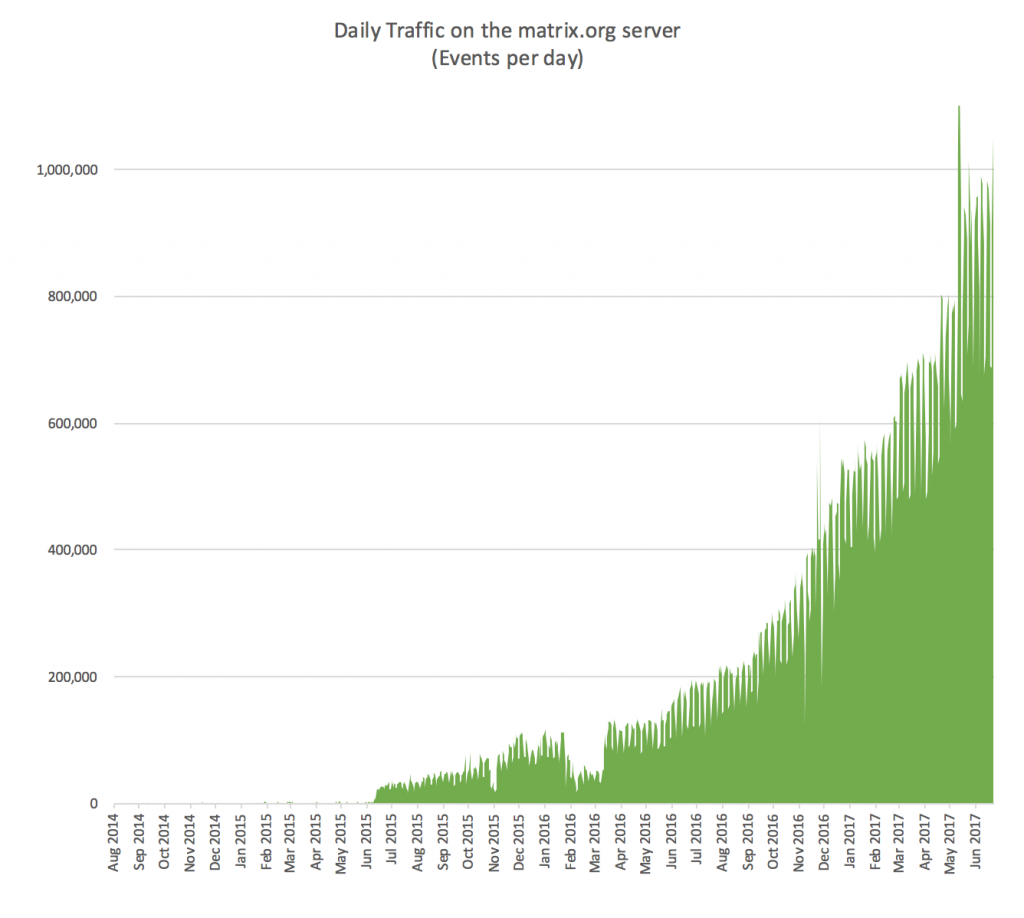

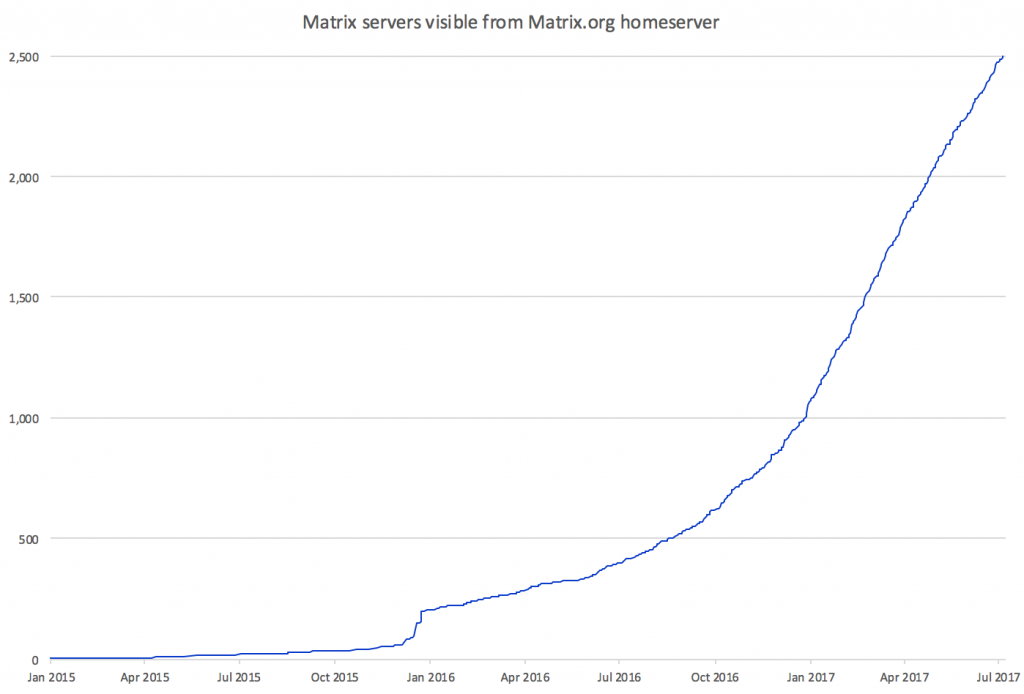

Despite the funding issue, the project really is going very well. Our vital stats (as seen through the lens of the matrix.org synapse) are looking like this:

And meanwhile, looking back at the last big update (Holiday Special 2016), we can compare our progress with our goals for 2017 thus far:

- Getting E2E Encryption out of beta ASAP.

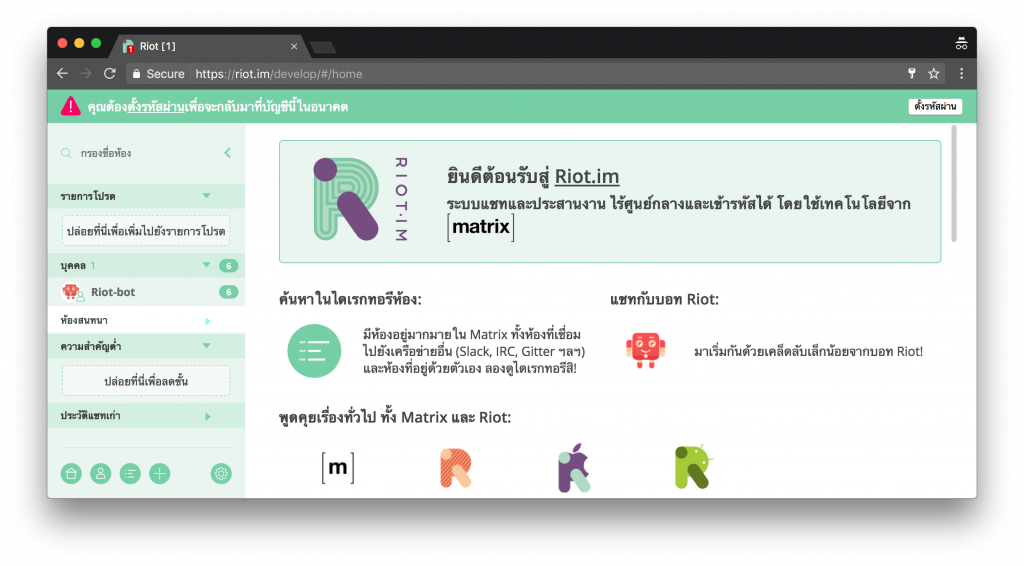

This has progressed massively - we haven't really yelled about it yet, but the latest https://riot.im/develop/ now finally implements the ability to share message keys between clients to let them decrypt older history and fix “unable to decrypt” errors (Mobile coming soon). Meanwhile various root causes of “unable to decrypt” errors have been gradually eliminated; I can't actually remember the last time I saw one! Once key-sharing and the improved device verification UX are fully tested and tuned we should be able to declare E2E out of beta.

Work on fixing the final causes of "unable to decrypt" (UISI) errors in E2E is progressing well: here's a sneak preview of things to come!! pic.twitter.com/0oGJjm8ZHT

— Matrix (@matrixdotorg) May 25, 2017

- Ensuring we can scale beyond Synapse – i.e. implement Dendrite

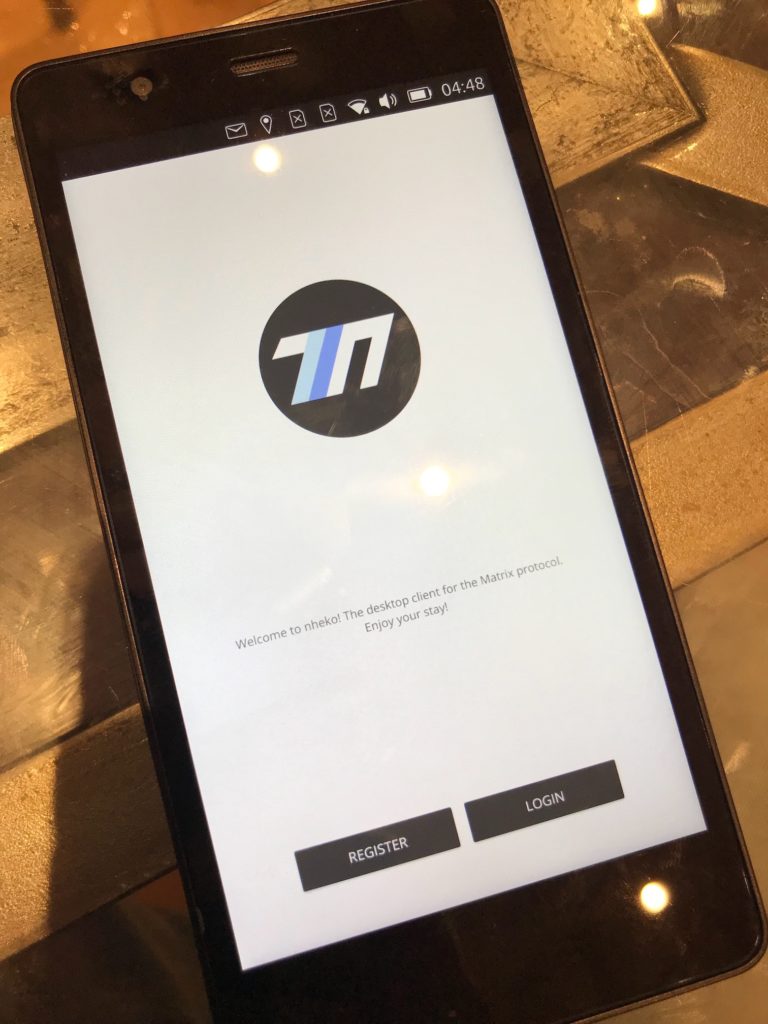

Likewise, Dendrite is on track: we've implemented all the Hard Stuff which forms the skeleton of Dendrite (core federation, message signing, /sync, message sending, media repository etc) - which takes us to over 50% of Phase 1. After Phase 1, we will have an initial usable release for all the core functionality. Synapse's performance has also improved enormously this year.

New milestone for Dendrite: sending messages over federation BOTH WAYS between dendrite & synapse! A bit more polish & we can cut an alpha!! pic.twitter.com/DWs6rFqZcQ

— Matrix (@matrixdotorg) June 23, 2017

- Getting as many bots and bridges into Matrix as possible, and doing everything we can to support them, host them and help them be as high quality as possible – making the public federated Matrix network as useful and diverse as possible.

Bridges and bots continue - from the core team we have a ‘puppeting’ Telegram bridge (matrix-appservice-tg), and from the wider community we have Discord, Skype, Signal, new Rocket.Chat and more. Getting them polished and live is certainly an area where we need more manpower though.

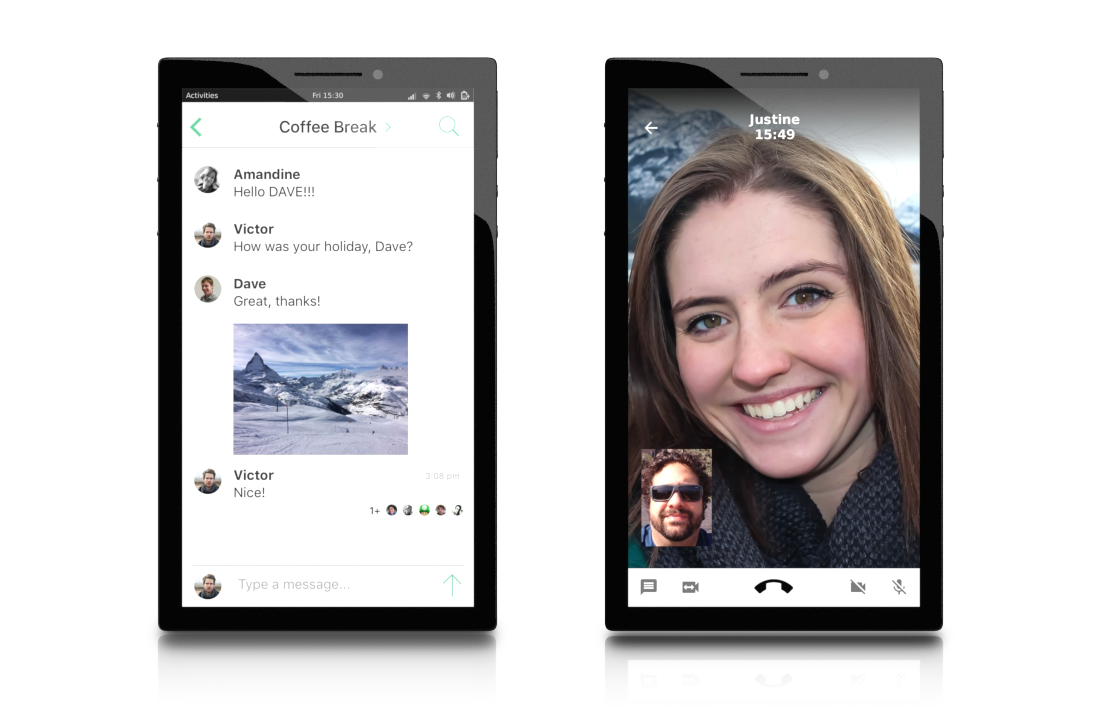

- Supporting Riot's leap to the mainstream, ensuring Matrix has at least one killer app.

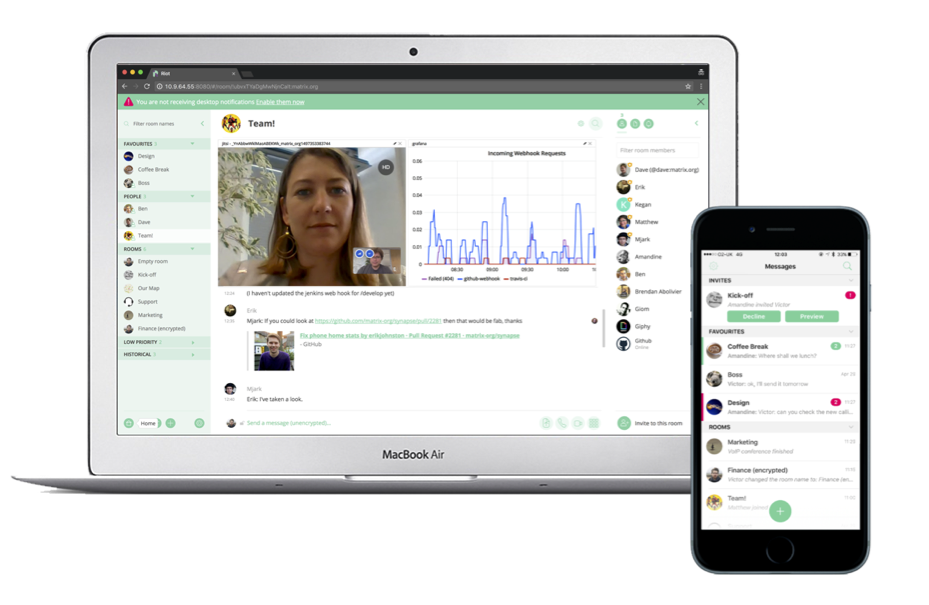

Riot has been sprouting new releases every few weeks, with a huge emphasis on improving UX:

- an entirely new streamlined sign-up process

- the new concept of home pages

- a user directory search that actually works

- internationalised to 27 languages

- compact layout

- loads of desktop improvements

- piwik analytics support; etc.

There is still a lot of UX work to be done, but it's converging fast on being a great entry point into the Matrix ecosystem, driving its growth across different groups and communities.

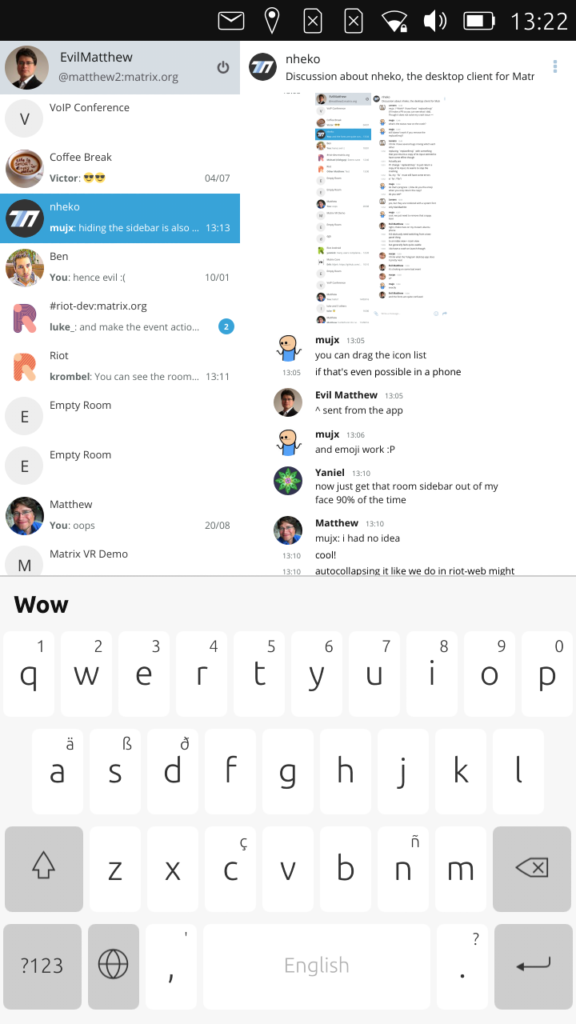

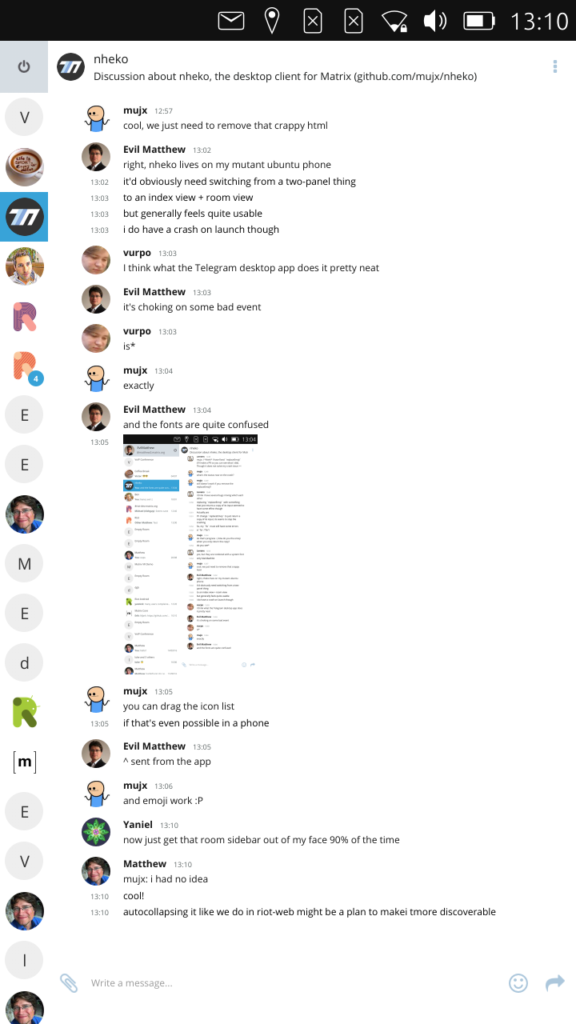

Meanwhile, a massive update to the iOS & Android apps landed just yesterday, bringing an entirely new UI layout that separates People from Rooms, synchronised Read Markers, and more!

- Adding the final major missing features:

- Customisable User Profiles (this is almost done, actually)

This is still hovering at ‘almost done’, and will be needed for some of the implementation of Groups (see below).

- Groups (i.e. ability to define groups of users, and perform invites, powerlevels, etc. per-group as well as per-user)

Groups are also in testing in Synapse! These will probably be the single biggest change to Matrix that we've seen since E2E encryption landed: they change the dynamic of the whole network, given that users can explicitly declare allegiance to different groups, which in turn have their own home pages and directories etc. It lets users form communities, and declare their participation in those communities (if desired), and also lets rooms be grouped together. One of our single biggest requests has been “subrooms” and we're incredibly excited to see how well Groups solve this.

- Threading

Sadly no progress on Threading so far this year.

- Editable events (and Reactions)

We're hoping to get looking at this (at last!) once Groups are done.

- Maturing and polishing the spec (we are way overdue a new release)

You'll have noticed that in the “how many people work on Matrix?” stats above, we didn't mention anyone working on the Spec. Because right now there isn't anyone explicitly maintaining it, unfortunately; updates are done best-effort when everyone's primary responsibilities allow it. That said, there's quite a lot of good stuff currently unreleased on HEAD. This is something which is obviously critical to fix once we have sustainable funding sorted again. We can only apologise to folks like the Ruma developers who have suffered from the spec lag. :(

- Improving VoIP – especially conferencing.

VoIP is improving lots on iOS, thanks to Denis Morozov's GSoC project, and meanwhile we have all new conferencing powered by Jitsi on the horizon in the next few weeks too.

- Reputation/Moderation management (i.e. spam/abuse filtering).

Lots of thinking about this (see below), but no development yet.

- Much-needed SDK performance work on matrix-{react,ios,android}-sdk.

About 40% of the desired performance work has happened here (although not all has gone live yet).

- …and a few other things which would be premature to mention right now. :D

All will be revealed in the next week or two - but suffice it to say that Integrations are going to be getting a Lot More Useful™. :)

Reflections

There are very very few people actually working professionally on trying to build general-purpose open communication networks and protocols. There's us, some XMPP, IRCv3 and GNU Social/Mastodon folks, GNU Ring, Tox, Briar, Secure Scuttlebutt, IPFS, Status.im, Ricochet… and that's literally all the major projects I can think of (sorry if I missed you!). There's probably only 50 developers in total working in this domain as their day job.

Meanwhile, there are literally hundreds of thousands of folks trudging away building more and more near-indistinguishable proprietary closed communication systems - trapping users inside ever more silos and fragmenting the basic ability to communicate on the ‘net. It's like a world where the open web was pushed into a tiny underground resistance, and everyone else was trapped in the walled gardens of AOL and CompuServe (or more contemporarily: Facebook, Twitter, WhatsApp etc).

In other words: the whole world of decentralised communication desperately needs your support. This is a clear case of user choice and freedom: to give users the ability to pick who they trust with their data and metadata, without being forced into unilaterally trusting the Silicon Valley megacorps. And this, dear Reader, is your chance to fix the world for the greater good. Seriously, the Matrix team is one of a handful in the world in a position to continue to push things in the right direction and avoid us falling into a permanent dystopia where communication is even more closed and proprietary than the Public Telephone Network!

Finally, there's an even bigger issue at stake here than open communication. As an open network, people can literally publish whatever content they like into Matrix - same as the web or the internet itself. As a result, there's scope for spam; abusive/malicious content; propaganda; and generally the whole spectrum of the best and worst of humanity. Now, if we were a centralised system like Facebook, we might hire thousands of content moderators to frantically impose a rulebook on ‘acceptable' content. Or we might build invisible filter-bubbles for our users based on their social graph, cocooning them from scary unfamiliar content outside their social circles and reinforcing their preconceptions (whilst the resulting self-affirmation keeps them coming back, viewing more and more ads).

But we're decentralised, and we have no absolute moral arbiter, and nor should we - on an open network it should be up to users and users alone to define and manage their own worldview and alignment. Plus we are not fiscally obligated to keep users coming back to view more ads no matter what. Instead, we are forced to confront the fundamental problem: building tools which empower users to curate and visualise their own content filters; letting them filter out the stuff they're not interested in or find repellent… while still helping them be aware of their own viewpoint and the shape of the world beyond it. We haven't really started building this yet, but in the long term our feeling is that these tools will literally be vital for the survival of the human race (e.g. exposing anti-climate-change propaganda for what it is or helping users opt out of World War 3) - let alone the success of decentralisation. A world where users blindly consume propaganda is doomed, and it's a fascinating situation that the same tools which will allow Matrix users to tune out the rooms, users and conversations they're not interested in could be directly applied to the bigger global problem.

So: Matrix needs you. Please become a supporter on Patreon or Liberapay, and help us save the world :)

- Matthew, Amandine & the whole Matrix.org team.